How To Scrape 1,000 Google Search Result Links In 5 Minutes

Content

- Is It OK To Scrape Data From Google Results?

- Not The Answer You're Looking For? Browse Other Questions Tagged Web-scraping Or Ask Your Own Question.

- How To Scrape 1,000 Google Search Result Links In 5 Minutes.

- This Is The Best Way To Scrape Google Search Results Quickly, Easily And For Free.

Is It OK To Scrape Data From Google Results?

Every time you run this script with a keyword passed as an argument, it generates an HTML file containing the top ten search listings for that keyword. This lets you automate the monitoring of search rankings without manual effort. Trigger the API, and you should see a long array of results containing the title and link of each search result, something like this. Select the “POST post search” endpoint in the API console and pass the JSON object, as shown below. In this case, we're searching for “API Marketplace,” and the results are limited to 100.
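The step that turns a keyword's top ten results into an HTML file can be sketched as follows. The original script renders the page through a Mako template; this sketch swaps in the standard library's `html.escape` and f-strings so it runs with no dependencies. It assumes each result is a dict with `title` and `link` keys, matching the title-and-link array described above. `generate_serp` borrows the name the script uses, but its signature, the page layout, and the file-naming scheme here are our own hypothetical choices.

```python
import html
from pathlib import Path


def render_serp_html(keyword: str, results: list[dict], limit: int = 10) -> str:
    """Render the first `limit` results as an ordered HTML list.

    Each result is assumed to be a dict with "title" and "link" keys.
    Values are HTML-escaped so titles containing < or & display correctly.
    """
    items = "\n".join(
        '<li><a href="{}">{}</a></li>'.format(
            html.escape(r["link"], quote=True), html.escape(r["title"])
        )
        for r in results[:limit]
    )
    kw = html.escape(keyword)
    return (
        "<!DOCTYPE html>\n"
        f"<html><head><title>SERP: {kw}</title></head>\n"
        f"<body>\n<h1>Top results for {kw}</h1>\n<ol>\n{items}\n</ol>\n</body></html>\n"
    )


def generate_serp(keyword: str, results: list[dict], out_dir: str = ".") -> Path:
    """Write the rendered page to <out_dir>/<keyword>.html and return the path."""
    # Replace anything that is not a letter or digit so the keyword is a safe file name.
    safe_name = "".join(c if c.isalnum() else "_" for c in keyword) or "serp"
    path = Path(out_dir) / f"{safe_name}.html"
    path.write_text(render_serp_html(keyword, results), encoding="utf-8")
    return path
```

For example, `generate_serp("API Marketplace", results)` writes `API_Marketplace.html` to the current directory. Only the first ten entries are rendered, even if the API returned up to 100.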

Not The Answer You're Looking For? Browse Other Questions Tagged Web-scraping Or Ask Your Own Question.

Let’s look at one of the endpoints to get a glimpse of the search results this API returns. Recently a customer of mine had a huge search engine scraping requirement, but it was not ongoing; it was more like one big refresh per month.

How To Scrape 1,000 Google Search Result Links In 5 Minutes.

If you can find the page number in the URL, that is a good sign. Unfortunately, tables and search results in HTML pages are implemented in many different ways.
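When the page position is carried in the URL, walking through result pages is just a matter of changing one parameter. A minimal sketch, assuming Google's `start` query parameter, which offsets results in steps of ten; that parameter is not named in the text above, and Google can change its URL scheme at any time.

```python
from urllib.parse import urlencode


def google_page_urls(query: str, pages: int, per_page: int = 10) -> list[str]:
    """Build one results URL per page by stepping the `start` offset."""
    # urlencode escapes spaces as '+', the form Google expects in `q`.
    return [
        "https://www.google.com/search?" + urlencode({"q": query, "start": page * per_page})
        for page in range(pages)
    ]
```

`google_page_urls("web scraping", 3)` yields URLs with `start=0`, `start=10` and `start=20`.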

This Is The Best Way To Scrape Google Search Results Quickly, Easily And For Free.

If Google blocks you, the block may last anywhere from a few minutes to a few hours, so you have to stop scraping immediately. Update the following settings in the GoogleScraper configuration file scrape_config.py to your own values. This should get you started with your own Google scraper.

We don't serve cached data: we always provide the most recent and most accurate information present on the website. However, you are free to cache the results in your own systems to cut back on API calls and costs. With just a few lines of code, you can integrate our API with your software and start receiving data as a JSON response.

Save the Python code as a file named ‘serp_generator.py’ and make sure that this file, together with the Mako template in ‘search_result_template.html’, resides in the same directory. Earlier, we tested the “POST post search” endpoint manually. With the requests module, you can invoke the API programmatically. At the beginning of the script, we import the Python modules it uses. We also need to define your RapidAPI credentials.
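Invoking the search endpoint programmatically, with RapidAPI credentials and a local cache, might look roughly like this. The `X-RapidAPI-Key` and `X-RapidAPI-Host` headers follow RapidAPI's usual convention, but the host, the `/search` path, and the JSON field names (`query`, `limit`) are placeholders to be replaced with whatever the API console shows. The sketch uses the standard library's `urllib.request` in place of `requests` so it has no dependencies, and a plain in-memory dict stands in for whatever cache your system would use.

```python
import json
import urllib.request

# Hypothetical values: copy the real host and key from the RapidAPI console.
RAPIDAPI_HOST = "example-google-search.p.rapidapi.com"
SEARCH_URL = f"https://{RAPIDAPI_HOST}/search"
RAPIDAPI_KEY = "YOUR_RAPIDAPI_KEY"

# Simple in-memory cache keyed by (query, limit) to avoid repeat API calls.
_cache: dict[tuple[str, int], dict] = {}


def build_search_request(query: str, limit: int = 100) -> urllib.request.Request:
    """Build the POST request; the JSON field names are assumptions."""
    body = json.dumps({"query": query, "limit": limit}).encode("utf-8")
    return urllib.request.Request(
        SEARCH_URL,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "X-RapidAPI-Key": RAPIDAPI_KEY,
            "X-RapidAPI-Host": RAPIDAPI_HOST,
        },
    )


def trigger_api(query: str, limit: int = 100) -> dict:
    """Call the API once per (query, limit) and reuse the decoded JSON afterwards."""
    key = (query, limit)
    if key not in _cache:
        with urllib.request.urlopen(build_search_request(query, limit), timeout=30) as resp:
            _cache[key] = json.load(resp)
    return _cache[key]
```

Separating `build_search_request` from `trigger_api` keeps the request shape easy to inspect without spending an API call.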

Plus, it’s free to use up to a certain number of requests per month. This is a good way to try out the service and see whether you are getting the data you need. It only takes a few clicks and a few lines of code to get started.

In this video I show you how to use a free Chrome extension called Linkclump to quickly copy Google search results to a Google Sheet. This is the best way I know to copy links from Google. I've been using it (the search engine scraper and the suggest one) in multiple projects. Once a year or so it stops working because of changes at Google, and it is usually updated within a few days. The last time I looked into it, I was using an API to search through Google.

I can find the data when I inspect the page in a browser, and I can see there's a table for it, but I get lost in the multiple div references it has. I’ve spent hours trying to figure it out without success.

It’s better to move the file to OneDrive for Business and avoid this method. To check whether this is possible, try to find your Excel data in the browser’s HTML source when you view the source. Finally, by drilling down into the Table objects in the Query Editor that match the tags in the browser, we reached an important step.

If we get banned, we will be presented with an HTTP error, and if we have some kind of connection problem, we will catch it using the generic requests exception. Now that we have grabbed the HTML, we need to parse it. Parsing the HTML allows us to extract the elements we want from the Google results page. For this we are using BeautifulSoup, a library that makes it very easy to extract the data we want from a webpage.

This is also where we import the Mako and Requests libraries. The Mako templating engine is a Python-based HTML templating library for generating HTML pages. The Python script uses this library to generate the final SERP listing page. With this script, you can keep tabs on the search rankings of the keywords of your choice.
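The parsing step can be sketched without third-party packages. This version swaps BeautifulSoup for the standard library's `html.parser.HTMLParser` and assumes each organic result is an `<a href>` element wrapping an `<h3>` title. That layout is an assumption about Google's markup, which changes often, so treat the class as a starting point rather than a reliable extractor.

```python
from html.parser import HTMLParser
from typing import Optional


class ResultLinkParser(HTMLParser):
    """Collect {"title", "link"} dicts for <a href> elements that contain an <h3>."""

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True, so &amp; arrives as "&"
        self.results: list[dict] = []
        self._href: Optional[str] = None
        self._in_h3 = False
        self._title_parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._title_parts = []
        elif tag == "h3" and self._href:
            self._in_h3 = True

    def handle_endtag(self, tag):
        if tag == "h3":
            self._in_h3 = False
        elif tag == "a":
            # Only links that wrapped some <h3> text count as results.
            title = "".join(self._title_parts).strip()
            if self._href and title:
                self.results.append({"title": title, "link": self._href})
            self._href = None
            self._title_parts = []

    def handle_data(self, data):
        if self._in_h3:
            self._title_parts.append(data)


def parse_results(html_text: str) -> list[dict]:
    """Feed a results page to the parser and return the collected links."""
    parser = ResultLinkParser()
    parser.feed(html_text)
    parser.close()
    return parser.results
```

Plain navigation links such as "Images" are skipped because they contain no `<h3>`.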

- You can download that file to your PC and use it anywhere.

- Powered by an intelligent parser, our Google search results API reliably supplies all SERP elements.

- If something can’t be found in Google, it may well mean it isn't worth finding.

- Google is today’s entry point to the world's greatest resource: information.

We then escape our search term, since Google requires spaces in search terms to be escaped with a plus character. We then use string formatting to build a URL containing all of the parameters originally passed into the function. I am trying to get to the data in the second chart on this webpage.

The Search Result API always gives you enough performance, no matter how high your request volume is. Sign up for our free plan and scrape up to 50 search result pages per month. You don’t have to code in Python or use complex regex rules to scrape the data from each page.

The script first checks for user input supplied as arguments. Afterward, it invokes trigger_api and generate_serp to generate the SERP result HTML file. To start using the Google Search API, you’ll first need to sign up for a free RapidAPI developer account. With this account, you get a universal API key to access all APIs hosted on RapidAPI.

Using the requests library, we make a GET request to the URL in question. We also pass a User-Agent with the request to avoid being blocked by Google for making automated requests. Without a User-Agent, you are likely to be blocked after only a few requests. While this isn’t hard to build from scratch, I ran across a few libraries that are easy to use and make things a lot simpler. If you start scraping again after a block, Google will use bigger guns.
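Escaping the term, formatting the URL, attaching a User-Agent, and checking the command-line input can be sketched as below. It uses `urllib.parse.quote_plus` for the plus-sign escaping and `urllib.request.Request` in place of `requests`; the User-Agent string is only an example, and the `hl` language parameter is our addition rather than something the text specifies. `main` stops short of calling `trigger_api` and `generate_serp`, which the article's script would do next.

```python
import sys
import urllib.request
from urllib.parse import quote_plus

# A realistic desktop browser User-Agent; the exact string is only an example.
USER_AGENT = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36"
)


def build_search_url(search_term: str, language_code: str = "en") -> str:
    """Escape the term (spaces become '+') and format it into a search URL."""
    escaped_term = quote_plus(search_term)
    return f"https://www.google.com/search?q={escaped_term}&hl={language_code}"


def build_request(url: str) -> urllib.request.Request:
    """Attach a browser-like User-Agent so the request looks less automated."""
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})


def main(argv: list[str]) -> int:
    # Check for a keyword on the command line before doing anything else.
    if len(argv) < 2:
        print(f"usage: {argv[0]} <keyword>", file=sys.stderr)
        return 1
    keyword = " ".join(argv[1:])
    request = build_request(build_search_url(keyword))
    # The article's script would call trigger_api and generate_serp here;
    # this sketch only reports the URL it would fetch.
    print(request.full_url)
    return 0
```

To run it as a script, call `sys.exit(main(sys.argv))` under an `if __name__ == "__main__":` guard; passing the request to `urllib.request.urlopen` would actually send it.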

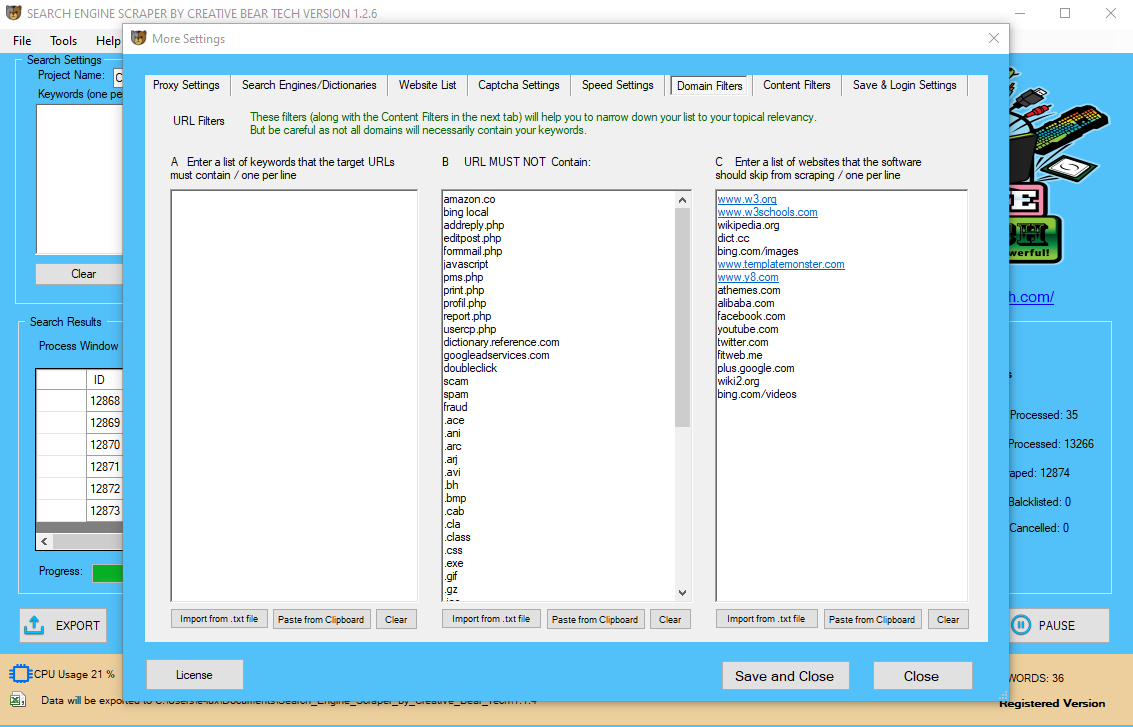

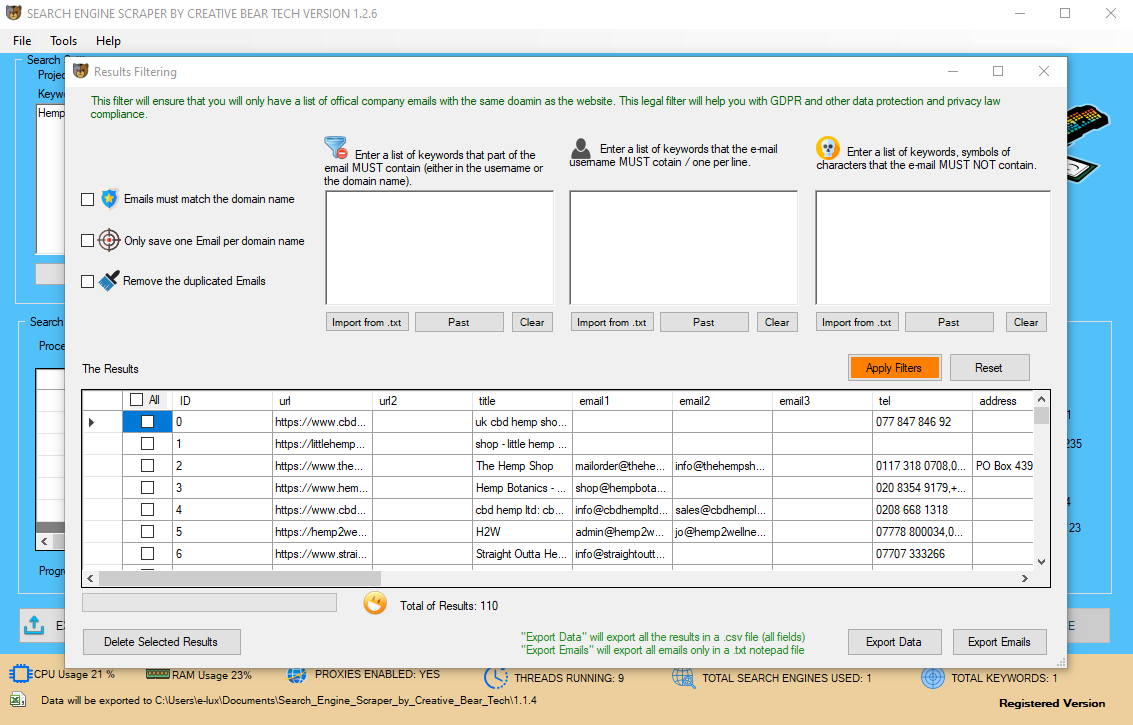

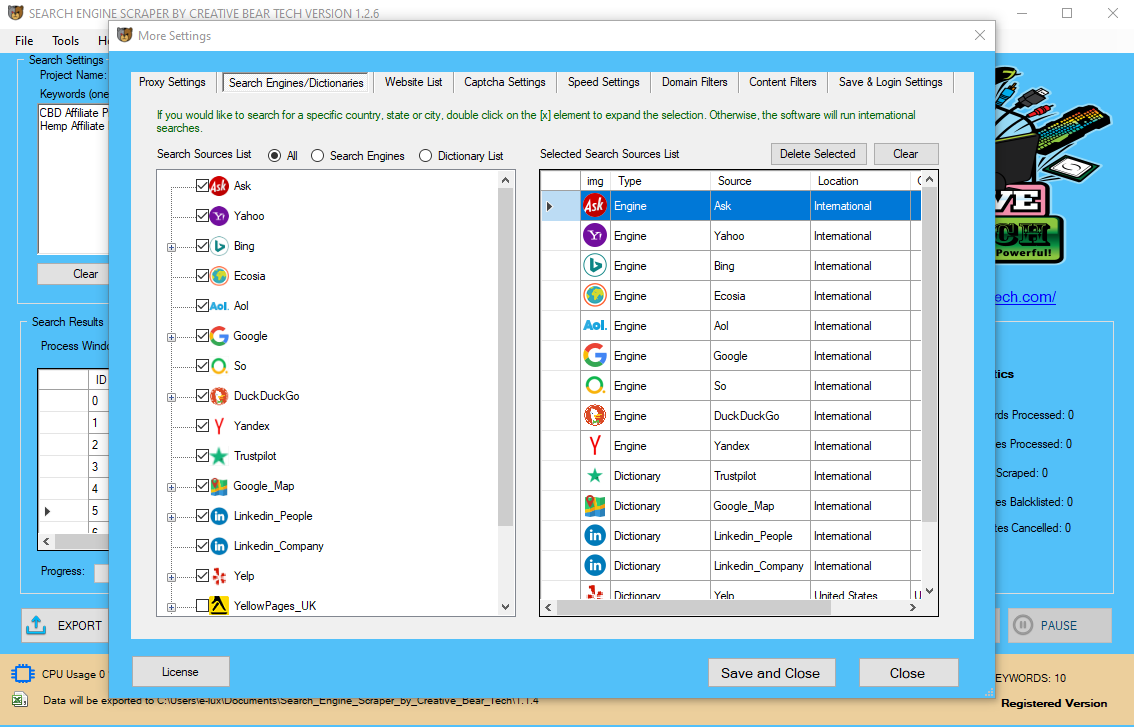

So we offer harvester statistics, letting you log how many results were obtained for each keyword in each search engine. Detailed statistics can be obtained when harvesting; we understand not everyone wants to scrape millions of URLs. You can add country-based search engines, or even create a custom engine for a WordPress site with a search box to harvest all the post URLs from the website.

This particular example will only get the first page of results. However, I have written an async Python library that supports multi-page scraping. For writing the results to a CSV file, I would suggest you check out the csv module in Python’s standard library. The module lets you write dictionaries out to a CSV file. I’ve noticed that Google mobile serves slightly different code, and the tag classes are fairly random. You need to reduce the rate at which you are scraping Google and sleep between each request you make. Alternatively, you can use proxies and rotate them between requests.
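Writing dictionaries to CSV and pacing requests with optional proxy rotation might look like this. `csv.DictWriter` is the standard-library class for writing dicts; the column names, the 5 to 15 second delay range, and the `fetch(keyword, proxy)` callable are hypothetical choices for illustration, and how a proxy is actually applied depends on the HTTP client you use inside `fetch`.

```python
import csv
import itertools
import random
import time
from typing import Callable, Iterable, Optional, TextIO

FIELDNAMES = ["keyword", "rank", "title", "link"]  # assumed result columns


def write_results_csv(out: TextIO, rows: Iterable[dict]) -> None:
    """Write one CSV row per result dict using the standard csv module."""
    writer = csv.DictWriter(out, fieldnames=FIELDNAMES)
    writer.writeheader()
    writer.writerows(rows)


def scrape_politely(
    keywords: Iterable[str],
    fetch: Callable[[str, Optional[str]], object],
    proxies: Optional[list[str]] = None,
    min_delay: float = 5.0,
    max_delay: float = 15.0,
) -> dict:
    """Call fetch(keyword, proxy) for each keyword, pausing between requests.

    Proxies, if given, are used round-robin; otherwise proxy is None.
    """
    proxy_cycle = itertools.cycle(proxies) if proxies else itertools.repeat(None)
    results = {}
    for i, keyword in enumerate(keywords):
        if i > 0:
            # Random pause so requests do not arrive at a fixed, bot-like rate.
            time.sleep(random.uniform(min_delay, max_delay))
        results[keyword] = fetch(keyword, next(proxy_cycle))
    return results
```

Open the output file with `newline=""`, as the csv documentation recommends, e.g. `with open("results.csv", "w", newline="", encoding="utf-8") as f: write_results_csv(f, rows)`.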

You can clone or download the complete script over at the git repo. Google is moving from a search engine to an answer engine. With the new release of thruuu, a free SEO tool, you can analyze up to 100 pages of the Google SERP and extract all the key information without writing a line of code. So go ahead and immerse yourself in the sea of search result analytics until we're back soon with another interesting demo of an API hosted on RapidAPI.

To build the URL, we properly format the query and put it into the q parameter. In one click, you get all of the SERP data in an Excel file. In the Navigator dialog, select the table Countries and areas ranked by population… and click Edit to clean the data in the Query Editor before it is loaded into your report. We will demonstrate the method by web scraping the Microsoft Find an MVP search results here. Each time a new SERP type is launched, it gets added to the API to broaden the results even more.

For the above, I’m using google.com for the search and have told it to stop after the first set of results. For example, when searching for a Sony 16-35mm f2.8 GM lens on Google, I wanted to grab some content (reviews, text, etc.) from the results. There are a number of different errors that could be thrown, and we look to catch all of these possible exceptions. First, if you pass data of the wrong type to the fetch results function, an assertion error will be thrown. If I recall correctly, that limit was 2,500 requests per day. Even if it does return correct results, it’s still a lot of manual work. I am a big fan of saving time, so here’s what you need to know about using a SERP API. The Batches API lets you create, update and delete Batches in your SerpWow account (Batches let you save up to 15,000 Searches and have SerpWow run them on a schedule).
Use the search_type param to search Google Places, Videos, Images and News. See the Search API Parameters Docs for full details of the additional params available for each search type. Zenserp.com lets you obtain location-based and geolocated search engine results. Our API returns search results in a handy JSON format that's easy to integrate into any software. They go so far as to block your IP if you automate scraping of their search results. I’ve tried great scraping tools like Import.io with no luck. This is especially the case when you’re trying to pull search results from pages that Google hides as duplicates. Searchresultapi offers a wide range of search result types so that developers can solve every possible use case. View our video tutorial showing the Search Engine Scraper in action. This feature is included with ScrapeBox and is also compatible with our Automator Plugin.
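The error handling described for the fetch results function (assertion errors for wrong argument types, then HTTP and connection errors) can be sketched like this. It swaps `requests` for the standard library, so `urllib.error.HTTPError` plays the role of the HTTP error you see when banned and `urllib.error.URLError` stands in for the generic requests exception. The parameter names, the `start` paging offset, and the choice to re-raise as `RuntimeError` are our assumptions, not the original script's code.

```python
import urllib.error
import urllib.request
from urllib.parse import urlencode

USER_AGENT = (
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0 Safari/537.36"
)


def fetch_results(search_term: str, page: int = 0, language_code: str = "en") -> str:
    """Return the HTML of one Google results page, raising RuntimeError on failure."""
    # Wrong argument types fail fast with an AssertionError.
    assert isinstance(search_term, str), "search_term must be a string"
    assert isinstance(page, int), "page must be an int"
    assert isinstance(language_code, str), "language_code must be a string"

    query = urlencode({"q": search_term, "start": page * 10, "hl": language_code})
    request = urllib.request.Request(
        f"https://www.google.com/search?{query}", headers={"User-Agent": USER_AGENT}
    )
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        # Caught first because HTTPError is a subclass of URLError.
        # A 429 or 503 status often means Google has flagged the traffic.
        raise RuntimeError(f"HTTP {err.code} from Google; you may be blocked") from err
    except urllib.error.URLError as err:
        # Connection-level problems such as DNS failures or refused connections.
        raise RuntimeError(f"connection problem: {err.reason}") from err
```

Note that `assert` statements are stripped when Python runs with `-O`; if the type checks must always run, raising `TypeError` explicitly is the sturdier choice.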